Malte's Project Showcase

Technologies: Python, NumPy, Matplotlib, LaTeX, PyTorch, Conda

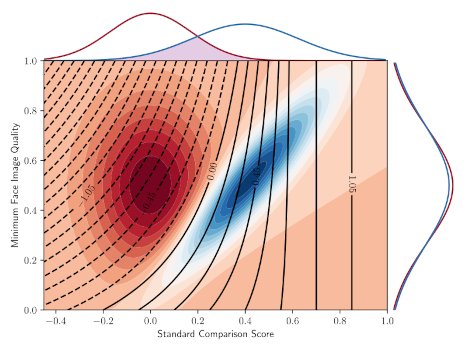

Description: Bachelor thesis. Using quality values from MagFace in the comparison function to improve recognition performance. The graphic shows a modelled face recognition scenario. We consider pairs of face images that belong either to the same person (genuine pairs) or to different persons (imposter pairs). For each pair we compute the standard comparison score (cosine similarity) and take the minimum of the two quality values. Plotting the genuine and imposter distributions shows that the ideal decision boundary follows a curve, because low-quality image pairs with a slightly below-average comparison score are likely genuine pairs. Thresholding on the standard comparison score alone cannot make use of this observation, which is why we proposed the QMagFace comparison score.
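The two values plotted per pair can be computed as in the following minimal sketch. It assumes the embeddings and quality values are already available; the function name `pair_features` and its arguments are hypothetical and not taken from the thesis code, and the QMagFace score itself is not reproduced here.

```python
import numpy as np

def pair_features(emb_a, emb_b, quality_a, quality_b):
    """Return (cosine similarity, minimum quality) for one image pair.

    Hypothetical helper: embeddings and quality values are assumed to come
    from a face recognition model such as MagFace.
    """
    emb_a = np.asarray(emb_a, dtype=float)
    emb_b = np.asarray(emb_b, dtype=float)
    # Standard comparison score: cosine of the angle between the embeddings.
    cosine = float(emb_a @ emb_b / (np.linalg.norm(emb_a) * np.linalg.norm(emb_b)))
    # The pair is only as reliable as its lower-quality image.
    return cosine, min(quality_a, quality_b)
```

Each pair then becomes one point in the plot, with the comparison score on one axis and the minimum quality on the other.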

Technologies: JavaScript, Python

Description: Side project. I wanted to learn new Megaminx algorithms to improve further, but I was unhappy with the existing tools. So I took it upon myself to build an improved version on top of what was already there. Once I had finished the Megaminx algorithm trainer, I added algorithm trainers for other events as well, resulting in 7 trainers for 4 puzzles: Megaminx, Skewb, Pyraminx and 2x2.

Technologies: Python, PyTorch, Detectron2, LaTeX

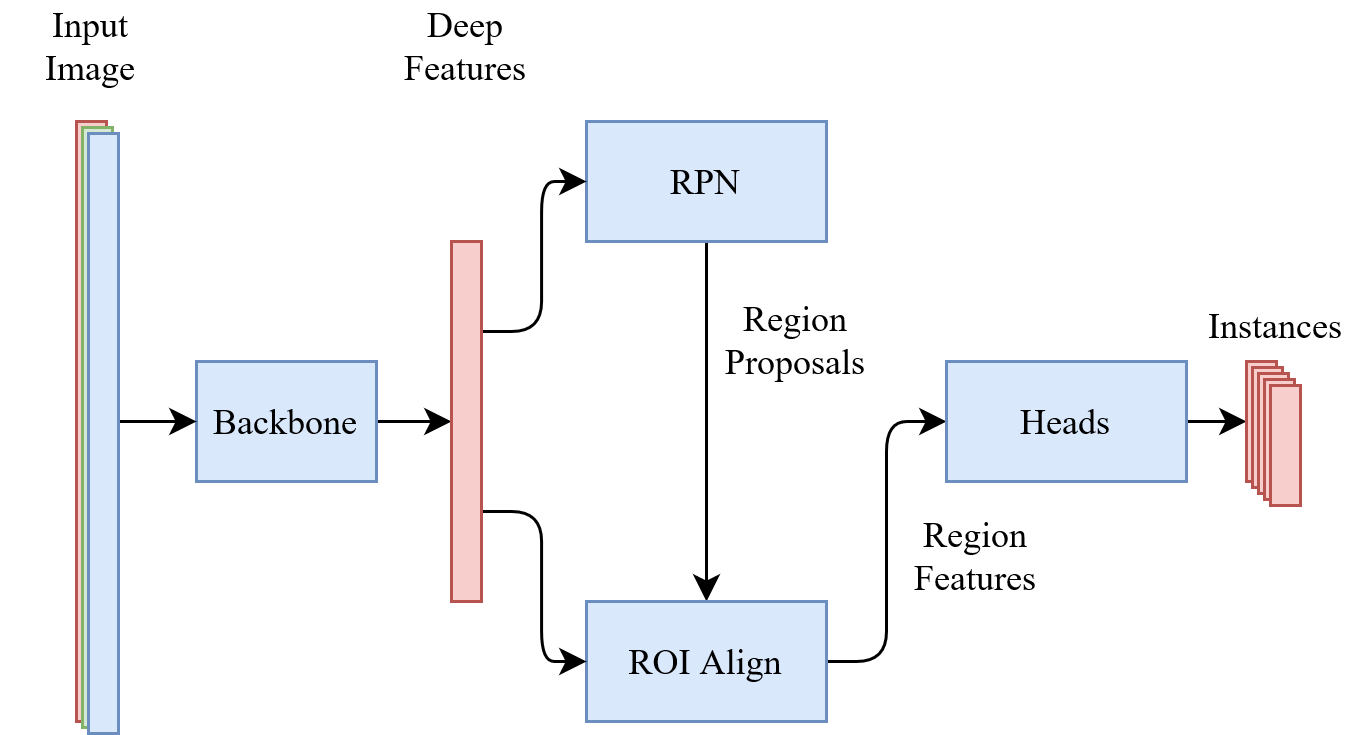

Description: University project. Havasi et al. propose a new technique (MIMO) to train deep ensembles using just a single network. Following Cygert et al. (2021), who applied MIMO ensembling to Faster R-CNN, we applied the technique to Mask R-CNN. Unfortunately, the results left a bit to be desired. The graphic shows the proposed network architecture.

Technologies: C++, CMake, OpenMP, Julia

Description: Side project. For the speedcubing discipline "Fewest Moves Challenge" there is a solving technique called Domino Reduction (DR), explained in much detail by Herbert Kociemba. It is the first step of the two-phase algorithm for solving a 3x3 Rubik's Cube. Because humans usually can't easily find a direct solution after DR, people started reducing the subset further to just 180° turns [U2, D2, R2, L2, F2, B2]. This is called Half Turn Reduction (HTR). Together with a friend, I wanted to answer the question "What is the distribution of optimal move counts to achieve HTR over all possible starting DRs?" To answer it, we wrote a solver in Julia and then ported it to C++, adding multi-threading to speed up the processing.
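One common way to obtain such a distribution is a breadth-first search that starts from every goal state and records the depth at which each state is first reached. The sketch below illustrates the idea on a toy puzzle (sorting three items with adjacent swaps). It is an assumption for illustration, not necessarily how our solver works, and the names `depth_distribution` and `adjacent_swaps` are hypothetical.

```python
from collections import Counter, deque

def depth_distribution(goal_states, neighbors):
    """Count how many states need 0, 1, 2, ... moves to reach a goal state.

    Assumes every move can be undone by another move, so searching
    outwards from the goals gives the optimal distance to the goals.
    """
    goal_states = list(goal_states)
    depth = {state: 0 for state in goal_states}
    queue = deque(goal_states)
    while queue:
        state = queue.popleft()
        for nxt in neighbors(state):
            if nxt not in depth:  # first visit is the shortest path in BFS
                depth[nxt] = depth[state] + 1
                queue.append(nxt)
    return Counter(depth.values())

def adjacent_swaps(state):
    """Toy move set: swap two neighbouring items of a tuple."""
    for i in range(len(state) - 1):
        items = list(state)
        items[i], items[i + 1] = items[i + 1], items[i]
        yield tuple(items)

distribution = depth_distribution([(0, 1, 2)], adjacent_swaps)
```

For the cube, the goal set would be all HTR states and the moves would be the turns allowed within DR; the state space is far larger, which is where a compiled language and multi-threading pay off.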

Technologies: C++, Python, NumPy, PyTorch, LibTorch, OpenMP, OpenCV

Description: University project. GIF is a common file format, especially on the web. However, to save an image as a GIF, one has to define a palette of at most 256 colors; no other colors can be used in the image. Because of this, converting to GIF usually causes a significant loss in quality. To mitigate this, we can use color dithering. The most common technique for color dithering is the Floyd-Steinberg dithering algorithm. In this university project I implemented and extended a modern version of the "Dithered Color Quantization" paper by Buhmann et al.
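For reference, classic Floyd-Steinberg dithering can be sketched as follows. This is the textbook baseline, not the method from the Buhmann et al. paper or my extension of it; the function name `floyd_steinberg` and the choice of float RGB arrays are assumptions made for this example.

```python
import numpy as np

def floyd_steinberg(image, palette):
    """Dither an H x W x 3 float image to a K x 3 palette.

    Returns an H x W array of palette indices.
    """
    work = np.array(image, dtype=float)  # copy, because errors are added in place
    palette = np.asarray(palette, dtype=float)
    height, width, _ = work.shape
    indices = np.zeros((height, width), dtype=int)
    for y in range(height):
        for x in range(width):
            old = work[y, x].copy()
            # Pick the palette color closest to the (error-adjusted) pixel.
            idx = int(np.argmin(((palette - old) ** 2).sum(axis=1)))
            indices[y, x] = idx
            err = old - palette[idx]
            # Push the quantization error onto the not-yet-visited neighbours.
            if x + 1 < width:
                work[y, x + 1] += err * 7 / 16
            if y + 1 < height:
                if x > 0:
                    work[y + 1, x - 1] += err * 3 / 16
                work[y + 1, x] += err * 5 / 16
                if x + 1 < width:
                    work[y + 1, x + 1] += err * 1 / 16
    return indices
```

On a uniform mid-gray image with a black-and-white palette, the result alternates between both colors instead of collapsing to a single one, which is exactly the effect dithering is meant to achieve.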

[Figure: panels "Original Image" and "8 Color Quantization"]

Technologies: Rust

Description: Side project. A friend asked me a question about calculating a combinatorial value. After solving the problem, I joined his project by implementing a competing Simple Tafl solver. Simple Tafl is a version of the board game Hnefatafl with a simplified ruleset. By additionally decreasing the board size, we hoped to reduce the complexity enough to make the game fully solvable.

Technologies: CUDA, C++, Python

Description: University project. The Extra-P project aims to make it easier to identify scaling bugs by modelling program performance as a multi-dimensional function of a number of parameters. Possible parameters include anything related to the problem size or the number of cores solving the problem. One way to find the best-matching performance model is to try all combinations of parameter multiplication and addition and then solve for the best coefficients. However, the number of combinations grows exponentially with the number of parameters. As part of this university project we took existing Python code implementing multi-parameter performance modelling and ported it to C++ with CUDA. Thanks to a minimal representation of the problem and intensive performance optimization, we achieved a speedup of up to 100,000x, depending on the GPU model and the number of parameters.
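The search idea can be illustrated with a heavily simplified sketch for two parameters. The candidate terms in `TERMS`, the two hypothesis shapes, and the function names are assumptions made for this example; they are not Extra-P's actual search space or API.

```python
import itertools
import numpy as np

# Hypothetical candidate terms per parameter (not Extra-P's real term set).
TERMS = {
    "{}": lambda v: v,
    "{}^2": lambda v: v ** 2,
    "log2({})": np.log2,
}

def fit(columns, runtime):
    """Least-squares fit of runtime = c0 + sum(c_i * column_i)."""
    design = np.column_stack([np.ones_like(runtime)] + columns)
    coeffs, *_ = np.linalg.lstsq(design, runtime, rcond=None)
    error = float(np.sum((design @ coeffs - runtime) ** 2))
    return coeffs, error

def best_model(p, q, runtime):
    """Try every term pair, combined by addition or multiplication."""
    p, q, runtime = (np.asarray(a, dtype=float) for a in (p, q, runtime))
    best = None
    for (name_p, f), (name_q, g) in itertools.product(TERMS.items(), repeat=2):
        tp, tq = name_p.format("p"), name_q.format("q")
        hypotheses = [
            (f"c0 + c1*{tp} + c2*{tq}", [f(p), g(q)]),  # additive
            (f"c0 + c1*{tp}*{tq}", [f(p) * g(q)]),      # multiplicative
        ]
        for label, columns in hypotheses:
            coeffs, error = fit(columns, runtime)
            if best is None or error < best[2]:
                best = (label, coeffs, error)
    return best
```

Even this toy version evaluates 18 hypotheses for two parameters and three terms. Every additional parameter multiplies that count, and each hypothesis is an independent least-squares problem, which makes the search a natural fit for a GPU.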

Technologies: Python, NumPy, TensorFlow, Qt

Description: University project. As part of their bachelor thesis, another student implemented a TensorFlow model that can reconstruct masked parts of a face. In this university project we implemented a program to interactively demonstrate that student's face-completion algorithm. For the UI we used Python Qt bindings.

Technologies: Python, NumPy, TensorFlow, Qt, MXNet

Description: University project. Because we finished the "Face Completion Demonstrator" early, we started a second project as part of the same university course. In this project we implemented a simple UI in which a face detection system scans the current webcam feed and draws a bounding box around each detected face. Additionally, a quality score is computed following Terhörst et al. (2020) and visualized by coloring the boxes. It is also possible to enroll new faces into the system, and the demonstrator will then recognize any enrolled person.